Contents

- Introduction

- Core Best Practices

- Tracking Industry Trends

- Best Practices and Your Career

- Conclusion

1. Introduction

Software is a fast-paced industry. The lifespan of some skills actually seems to be decreasing over time; languages and frameworks have a limited lifespan. One of the most important habits to develop, therefore, is maintaining an understanding of current ‘best practices’. Best practices are patterns of techniques, assistive technologies, habits, and platforms that can aid a software development team in producing higher quality software more efficiently.

Best practices change depending on the time and environment in which code is produced. The first company I ever interned at produced fax-routing software for giant companies. The deployment process may have involved sending an engineer on-site with a hard drive to transfer a new version. The company I currently work for may build to AWS hosted servers several times daily depending on how many code changes have been committed in a particular day. This is an example of how ‘time to release’ has been compressed over the last 25 years to become virtually instantaneous in some environments. As such, best practices for managing very rapid changes have also needed to evolve.

When using best practices, code support and technical debt are less onerous for both individual programmers and companies as a whole. Code built with best practices tends to have a longer lifespan, as well as cost less to maintain. Additionally, inheritance of this code is more likely to be a workable solution to problems the code was not originally designed to solve. And, perhaps most importantly to an organization, developer turnover/responsibility sharing are much easier. While some developers code for job security, coding for your own obsolescence is better for your reputation and code reliability.

Core best practices are the foundation of a good programmer’s reputation. The first and foremost best practice is actually quite counterintuitive: write less code. This is reinforced by making environments, and the interactions between them, as simple and reliable as possible. While doing this, try to avoid making basic security mistakes. Then make a habit of unit testing, because everyone likes to sleep… and if you don’t write unit tests you don’t get to sleep as reliably! Finally, if you can, set up an integrated building and testing environment. It has become a fundamental part of any best practice approach.

Remember, though, that all of these practices are going to change over time and evolve. So being aware of industry trends and remaining ahead of the curve is fundamentally important to knowing which habits and tools you should adopt/foster or let atrophy.

Thus, as an experienced developer, staying aware of current best practices, and combining ‘what the cool kids are doing’ with what you already know, is an essential skill. My personal primary sources for this information are job postings, Stack Overflow surveys, and various forum boards. But knowing what’s going on, and applying it, are separate tasks.

Generally, learning to apply best practices is a more onerous task than knowing what they are. The time involved in relearning a habit (e.g. remembering to use sufficient comments), or rebuilding an environment that is currently comfortable, will often seem costly or frustrating to maintain up-front. And, sadly, it is. But there are many ways to learn to apply new best practices, and it is definitely worth doing.

2. Core Best Practices

Regardless of the churn in what’s best to do, some things will always be core best practices on which most developers agree:

- Write less code

- Make environments as simple as possible

- Avoid standard security issues

- Write basic unit tests

These four practices are at the core of having more maintainable and reusable code.

Write Less Code

Codebases tend to bloat. When issues are encountered, they tend to be fixed as quickly as possible, with minimal impact on running, working code. I have worked at places where critical chunks of code were surrounded by small branches handling stakeholder issues. This, of course, is a tradeoff.

Old code often has structural issues limiting its expansion or re-implementation, but at the same time contains a wide array of extremely specific features. Thus, when a codebase is bloated, it is bloated with fixes to customer-facing or architecture-related issues. As such, trashing your whole codebase is always more expensive than it looks, and you want to work very hard to keep minimalistic code/environments.

The most important best practice as a developer is to write less, more intelligently compartmentalized, code. As used to be drilled into me as a kid: Reduce, Reuse, Recycle. Reduce the amount of code you write, reuse your own and other programmers’ code regularly, and recycle it into more hard drive space when you can.
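As a small illustration of ‘reduce and reuse’, here is a sketch that counts word frequencies twice: once with a hand-written loop, and once by reusing `collections.Counter` from Python’s standard library. The function names are made up for this example; the point is only that the second version leaves less code to read, test, and maintain.

```python
from collections import Counter


def word_counts_by_hand(text: str) -> dict:
    """Hand-rolled version: more lines to read, test, and maintain."""
    counts = {}
    for word in text.lower().split():
        if word in counts:
            counts[word] += 1
        else:
            counts[word] = 1
    return counts


def word_counts_reused(text: str) -> Counter:
    """Reuses the standard library: one line of logic, already tested by others."""
    return Counter(text.lower().split())
```

Both functions return the same counts for the same input, so swapping one for the other is a safe way to shrink a codebase a little.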

To help you do this, read other people’s code every once in a while. Your code will improve if you read theirs critically, and their code will improve if you mention the issues you see in it. Reading with the intent of making small modifications (and even making them) will make this a much more meaningful task, so I do this as time allows. Note that if you’re doing unscheduled cleanup in a professional setting, pushing patches to production code is often frowned upon. I suggest talking through what you’re doing with the official maintainer in advance.

Learning ‘Design Patterns’ may or may not help you organize your code more effectively, but finding out how academics think solutions to common problems should be organized is a good way to give you skeletons for future organization. Finally, on code structure, something I feel I should always mention is: if you think of something, and are sure it’s brilliant, you’re most likely wrong.

“Premature optimization is the root of all evil” - Donald Knuth

As someone who maintained a codebase written by a developer who believed in his own magical powers, I can personally attest to the fact that they failed him fairly regularly. Fundamentally, most code is written a layer or two of abstraction above compilers.

Compiler writers are performance and logic professionals, focusing on assembly-level optimizations of code. When, in the higher-level languages 95%+ of professional programmers use, you are worried about optimization, you are (generally) making a mistake. If you’re going beyond programming with good fundamentals, what you may want is a more appropriate environmental setup.
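If you do suspect a performance problem, measuring is usually a better first step than guessing. The sketch below uses Python’s standard `timeit` module to time two ways of joining strings; the function names, the workload, and the repeat count are all assumptions chosen for illustration.

```python
import timeit


def join_with_loop(words):
    """Build the result by repeated concatenation."""
    result = ""
    for word in words:
        result += word
    return result


def join_with_builtin(words):
    """Let the built-in str.join do the work."""
    return "".join(words)


def seconds_for(func, words, repeats=200):
    """Return the total seconds taken by `repeats` calls of func(words)."""
    return timeit.timeit(lambda: func(words), number=repeats)
```

Calling `seconds_for(join_with_loop, words)` and `seconds_for(join_with_builtin, words)` on realistic data gives you numbers to compare. If the difference turns out not to matter for your workload, the simpler code wins.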

Environments should be simple and solid

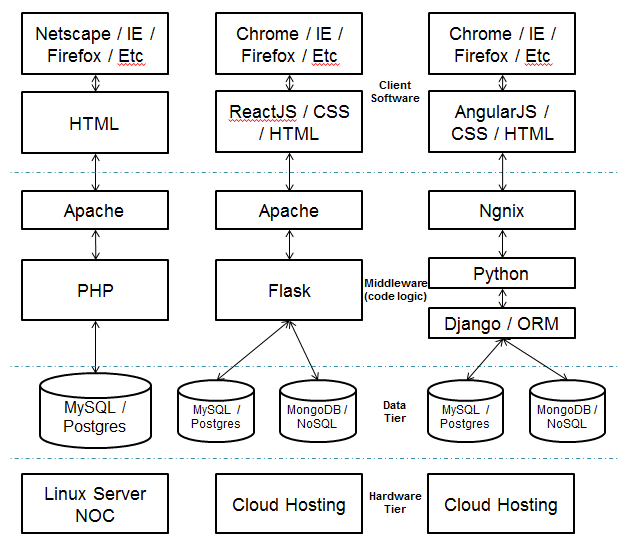

The more unique and complicated components a system has, the harder the entire system is to understand and refactor. Thus, modern best practices tend to focus the unique code in a system into loosely coupled parts, and these loosely coupled parts are as simple to work with as possible. Take these three environments, for example:

On the left is a standard LAMP stack. For ten years, the primary programming stack for full-stack web development was Linux ➡ Apache ➡ MySQL ➡ PHP. This stack includes everything you need to create a server-side web application that shows the user an HTML page generated on the server, and there are still people delivering data with this stack today.

The middle software stack is a more modern approach. So modern it eschews an ORM and has developers writing raw MongoDB/SQL queries. This decision was made to increase the speed of development, at the cost of some of the features ORMs include. The front end of this software stack includes React, which is essentially a whole compiling development environment of its own that uses npm to build to usable code. This contrasts with the (comparatively) old-school environment’s inclusion of JavaScript/Silverlight in individual pages.

The software stack on the right is even more compartmentalized than the other two. Django REST Framework is probably used to interact with an Angular client using the MVC design pattern. Django has an ORM built into it, and that ORM can talk to most relational databases out of the box (and to MongoDB through a separate backend package). In this example, Nginx is chosen for being slightly lighter than Apache in some ways. And this example, like the previous more ‘modern’ example, uses cloud hosting because it’s cheaper to have someone else manage your hardware failures until you’re using a ton (probably literally) of hardware.

Overall, though, these environments tend to abstract their complexity into isolated parts. No developer or technician can know everything. The result is that over time, the most complicated code to write has generally been abstracted out. The prime examples are the widely used environmental components world-class experts write and maintain instead of in-house developers: web servers, networks, NOCs, and database engines. Thus your ‘need to know’ as a standard developer will rarely include storing millions of data points in a B-Tree, figuring out how to keep a database from having race conditions, or avoiding memory leaks during a web server’s 99.9999% uptime window.

Many modern environments contain ORMs, to the point where database development has reduced value as a career option. After writing stored procedures containing 1000+ lines of code, having someone say, “SQL is cool, but you can’t actually do much with it” to me once was… disheartening. But there’s a reason for this: as a best practice, code shouldn’t be stored in your database. Modern ORMs such as Entity Framework, Django’s models, and Hibernate (which I have not used) abstract the database from code. There is definitely, however, a tradeoff between having a Database Administrator able to tune the data structure for performance and having programmers able to create freely.

But mostly, ORMs also abstract out the communication between two systems: code and data. Communication standards often end up very complex. The result? They tend to become abstracted. While teaching Python at a boot-camp, one of my students wanted to use the Twitter API in an in-depth way. Twitter’s API was broad and full-featured. That said, some specific features were only available if you used OAuth 1 rather than OAuth 2.

It turns out that where OAuth 2 had some simple, reliable steps, OAuth 1 was… byzantine. This caused the development of the simpler OAuth 2 standard and various libraries designed to manage handshaking. Generally, it makes a lot of sense to try and keep your communication methods as simple as possible and your data clean. For this reason, TCP/IP communications are generally done with libraries, and data is passed as JSON. Websites use REST. Compartmentalization and reliable low-time-investment interfaces are the norm, and should be.
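To make ‘simple communication and clean data’ a little more concrete, here is a minimal sketch of passing a message between two systems as JSON using only the standard library. The `TransferNotice` type and its fields are hypothetical, invented for this example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TransferNotice:
    """A hypothetical message type shared by sender and receiver."""
    transfer_id: int
    amount_cents: int
    currency: str


def encode(notice: TransferNotice) -> str:
    """Turn the message into a JSON string with a stable key order."""
    return json.dumps(asdict(notice), sort_keys=True)


def decode(payload: str) -> TransferNotice:
    """Rebuild the message; unexpected or missing keys raise TypeError."""
    return TransferNotice(**json.loads(payload))
```

The whole ‘protocol’ is one small, flat object. Anything that can read JSON can talk to it, which is most of the appeal.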

And making that low-time-investment communication work consistently requires tests. Writing tests isn’t fun at first. You know what you want to do, and you know your use cases, so why write tests? Because it requires you to think a second time and will send you in a better direction overall.

Unit tests weren’t a core best practice until Continuous Integration was possible, but now that it is, working with them is fundamental. End-to-end tests are harder to set up, but are now also a core best practice. This is because as development gets faster, developers become responsible for more of the system.

If the system is broken, the time you spend fixing it is time you could be using to build something new. So in the long run, front-loading all kinds of automated testing allows you to reduce the overall time you spend on site upkeep. And, moreover, allows you to sleep better (you don’t realize how much fun sleep can be until you’re woken up at 3:00am to hunt for a money transfer log). Because testing is so important, many teams use Test Driven Development, whose cornerstone is writing the tests before you write a single line of ‘code’ that solves your problem.
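As a rough sketch of the test-first habit, the example below uses Python’s standard `unittest` module. The tests are written first and describe the behavior I want; the function underneath is the least code that makes them pass. The function name and the formatting rules are assumptions made up for this illustration.

```python
import unittest


class CentsToDisplayTest(unittest.TestCase):
    """Written first: these tests describe the behavior we want."""

    def test_whole_dollars(self):
        self.assertEqual(cents_to_display(500), "$5.00")

    def test_pads_single_digit_cents(self):
        self.assertEqual(cents_to_display(1205), "$12.05")

    def test_negative_amount(self):
        self.assertEqual(cents_to_display(-1), "-$0.01")


def cents_to_display(cents: int) -> str:
    """Written second: format integer cents as a dollar string."""
    sign = "-" if cents < 0 else ""
    dollars, remainder = divmod(abs(cents), 100)
    return f"{sign}${dollars}.{remainder:02d}"
```

Saved as a file, this can be run with `python -m unittest <filename>`. A failing test then points at the exact case that broke, hopefully long before 3:00am.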

Finally, in making your environment simple and solid, I recommend documentation. There isn’t such a thing as self-documenting code. Picking simple, descriptive variable names goes a long way, but putting a little block of explanation at the top of a class or function won’t kill you. I promise. It may, instead, help you find a money transfer in the logs in the middle of the night.
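Here is roughly what I mean by ‘a little block of explanation’. The function, and the log format it assumes, are hypothetical; what matters is that the docstring tells the next reader what the function expects and what it deliberately does not do.

```python
def find_transfer_lines(log_lines, transfer_id):
    """Return the log lines that mention one money transfer.

    Assumes (hypothetically) that each relevant line contains the
    whitespace-separated token ``transfer_id=<number>``. Matching is on
    the whole token, so searching for 12 does not also return 123.
    """
    token = f"transfer_id={transfer_id}"
    return [line for line in log_lines if token in line.split()]
```

Four lines of explanation for two lines of code may look lopsided, but the explanation is the part you will be grateful for in the middle of the night.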

Avoid Simple Security Issues

Nobody knows anything about digital security. I bet you five dollars you can put ‘data breach’ into the search engine of your choice right now and find one. This is partially because the world is big and security is hard, but primarily because any system has security holes. So your primary job as a developer isn’t actually to make your systems bulletproof, but to avoid putting a big ‘come in here’ sign on them.

The times that my code has been breached have been when I used default passwords, or had wide open development back doors I closed only to have someone else open again. (Swear. Absolutely. Never my fault, even that one time.) Generally, you want to avoid the simplest stuff and hope you’re a harder target than the next app or database.

Intra- and inter-process communication security is where your personally-built security flaws will be. Thus, REST frameworks and simple object communications are industry standards. Where this starts to go wrong is where you take simple objects you have been passed and run them as inputs programmatically. E.g., taking a JSON object from an HTTP POST, deserializing it, and throwing a variable from there into a query directly as a string. This means that when you pass variables around, you want to be as certain of their type as possible.
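One way to be ‘as certain of their type as possible’ is to check a deserialized value before it goes anywhere near a query. This is a minimal sketch using only the standard library; the `account_id` field and the rules applied to it are assumptions for illustration, not a complete validation scheme.

```python
import json


def parse_account_request(raw_body: str) -> int:
    """Return a validated account id from a JSON body, or raise ValueError."""
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValueError("body is not valid JSON") from exc
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object")
    account_id = payload.get("account_id")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(account_id, bool) or not isinstance(account_id, int):
        raise ValueError("account_id must be an integer")
    if account_id <= 0:
        raise ValueError("account_id must be positive")
    return account_id
```

A body such as `{"account_id": "7; DROP TABLE users"}` is rejected here because the value is a string, so it never reaches the database layer at all.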

Where your code ends and your libraries begin is where the security errors you haven’t personally produced will arise. When I look at an outdated library, I also look at the patch notes for the new version. The reason Microsoft patches your computer as often as it does isn’t because they like spending money on programmers. It’s because an OS as widely used as Windows has thousands of programmers trying to break it all the time. If your local copy of Windows is outdated, you’re opening yourself to more vulnerabilities. Your servers and frameworks are not much different.

On the personal front, you don’t want your memory to be a single-point-of-failure either. If you’re in charge of systems (which, as the job of ‘developer’ gets bigger, is more likely) you’ll eventually have a ton of passwords, SSH keys, server certificates, etc. to manage. They’re generally text-based, so I suggest finding a password manager and using it. (Like Troy Hunt, I use KeePass and it’s free). It also generates passwords, so you don’t have ‘Dragons94!’ as your password to 75 different sites and systems. Use a generator to make a big one, copy your password file every once in a while, and move on. Also, rotate these passwords and keys regularly. Don’t end up on Slashdot.
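If you ever need to generate a password in code rather than in a password manager, Python’s standard `secrets` module is built for that job. The sketch below is one way to do it; the default length, the minimum length, and the character set are my own assumptions, not a standard.

```python
import secrets
import string


def generate_password(length: int = 24) -> str:
    """Return a random password drawn from letters, digits, and punctuation."""
    if length < 12:  # assumed minimum for this sketch
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from the operating system's secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))
```

The result still needs to live somewhere safe, which is the password manager’s job.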

Finally, avoid query injection. It’s simple to do, and almost doesn’t get a mention anymore because ORMs/REST frameworks tend to block it. Not perfectly, of course, but well enough. If you’re going to run something that comes from inter- or intra-process communication, pass the values as bound parameters instead of pasting them into the query string, and make sure what you run is the one query you meant to run. Done.
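For the cases where you do write a query by hand, a parameterized query is the usual defense. This sketch uses Python’s built-in `sqlite3` module with an in-memory database; the `users` table and both function names are made up for the example.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.execute("INSERT INTO users (username) VALUES (?)", ("alice",))
    conn.commit()
    return conn


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user by name, returning a row tuple or None."""
    # The "?" placeholder passes the value separately from the SQL text,
    # so the input is treated as data and is never parsed as SQL.
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cursor.fetchone()
```

With this in place, an input like `alice' OR '1'='1` is just an odd username that matches nobody, rather than a rewrite of your query.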

Source Control, Build Integration, and Test Integration

The final current core best practices I definitely should mention are source control, build integration and test integration. If you aren’t using source control, you aren’t aware of where your code is changing, or how it has changed. Additionally, if you’re not using it, you are going to be more lost than necessary.

As to integration, Jenkins and TeamCity feel like someone has invented fire, if you haven’t used them yet. Having a system that runs your unit tests and builds for you means deployment is reliable. Deployment being reliable means releases are more a question of “Are the code and the customer ready for each other?”, rather than “When, exactly, can we schedule all this so it will actually come together?”

I did some QA automation for a Wells Fargo group ten years ago, and releases were scheduled once a quarter, starting at 2:00 AM with 2-3 hours of deployment and 4 to N hours of testing (N being, in some cases, 28). Not all of the testing we needed to do could be done with an integration server, even now, but the releases themselves would have gone smoother with current technology. And to use better technology, you need to know what it is, and the current trends.

3. Tracking Industry Trends

Processor speed and efficiency may have doubled every few years for decades, but programming relies on humans, and we change much more slowly. If you keep an eye on new tools and practices others are using to produce software, you can adopt them to assist your productivity as well.

The best place to find out which new technologies and practices are efficient is job postings. Many jobs (especially at small companies) never hit broad posting boards, so once a company is advertising aggressively for a position, they are looking for something specific they cannot find among their development managers’ existing contacts. Thus, when a company is willing to take the risk of hiring a complete unknown, they generally try to state exactly what technical competencies they want that complete unknown to have.

Smaller, newer companies tend to be using the most up-to-date technologies. This is primarily because their fast-changing culture focuses them on low-investment projects with quick returns, but there are also several other factors at work, including the following:

- Turnover at smaller companies is higher, and technology workers have an easier time finding the next job with up-to-date experience

- Project owners at small companies tend to have more power to pick their own technologies

- Smaller projects mean that using new software is a lower risk

Larger companies tend to contrast with this and adopt new technologies more conservatively. Reliability, cost/benefit ratios, and risk are far more important to a traditional company with business continuity requirements. Thus, if you look at job postings, you will quite often notice that large companies are two-to-four years behind the cutting edge of technology.

Every year, Stack Overflow asks its developers which languages they are programming in, and which they want to develop in. If you find out what other programmers want to learn, two or three years later it will be (if not too complicated; I’m looking at you, CULisp) much more widely used. Developers generally want to stay employed, so they seek to align their learning with potential employers’ future needs. Thus, developers are continually attempting to replace the most inefficient portions of their time by using more efficient tools. That said, the most time-consuming part of software development will always be adjusting what tools and code can do to the end-user functionality people and businesses use.

If you go even deeper than these basic sources of information, you may find that there are other places to watch for what is up and coming. Slashdot, Quora, Reddit, and other forum/online meeting places contain more information — which is often unreliable, and should be checked against other sources — than standard media outlets. Learning what language will be hot next is often a matter of reading industry trends working developers believe are worthwhile. But knowing what these trends are isn’t enough; you’re going to need to practice them yourself.

4. Best Practices and Your Career

In some ways, the best approach to learning software development methods is to switch jobs. Technologies and practices have similar adoption curves — steep at the beginning, followed by slow growth if they become widely used, and then a slow decline. The biggest difference between various technologies is whether there is wide adoption, and lifecycle length. Therefore, if you are in a field like front-end development, the growth and adoption curve of a technology can happen entirely within a span of years, and the decline won’t involve very much maintenance work. The result? If you want to stay relevant within your front-end development career, you can’t rest on your laurels.

How to Choose Your Next Engineering Job

Generally, when you are looking for a new position, I recommend that you look for three things. Let’s break these down.

Learning: First and foremost, you want the new position to teach you something. As an experienced developer, you will always have the option of choosing between two types of work environments: those in which you have extensive experience, or new ones. It is more likely you will get paid more for working with technologies you already know. That said, if you mix technologies you are partially familiar with, and technologies you do not know, you can add value while also acquiring additional talents/bullet points on your resume.

Enjoyment: The second most important thing about any position, technically, is to pick problems and situations you enjoy. The more you enjoy the problems you are solving, the more willing you will be to expand your skillset and put in the time/effort to excel at what you do.

Suitability: Thirdly, know the nature of the company. Small companies tend to give their employees a massive array of responsibilities. At a small enough company, you can’t have an accurate job title; I am currently responsible for AWS instance management, TeamCity build processes, database administration, programming in five or six different languages, release notifications, hotfixes, architectural decisions, and so on.

In previous positions, I have had single areas of responsibility (verifying and rectifying accounting reconciliation results for an internal billing department, for example), and have found that the larger the company, the more my time focused on a single set of tasks. Generally, if you want to deepen your understanding of a specific set of tasks, you will be better served at a large company. Working with extensive, diverse responsibilities (and a limited safety net) will most often happen at a small one.

Most often, a company will stick with the technologies and practices it is working with when you start working there (unless you are hired to implement something new!). However, the smaller and more flexible the company, the more likely you will be empowered to implement whatever tool you see fit. Take advantage of this, research the most viable solutions to your hardest problems, and make them work.

Conversely, there may be advantages to working for a bigger company, in that if you can get permission to implement a new system or practice, you may also get training in how to do so. Additionally, disastrous failure is more likely to be tolerated at a larger company, so the risk in acquiring familiarity with a new technology is significantly lower.

Further Education

Some best practices are easiest to acquire in a more formal scholastic (or other educational) setting. While it is easy enough to acquire a new language on your own, it is sometimes quite difficult to push yourself to learn the ins and outs of deeper topics. A solid grounding in theory is useful for maintaining a steady career, as is the gravitas of advanced education or fresh, hot boot camp credentials.

The market for programmers is currently booming. I have not seen it as strong since 1999ish. If you enjoy programming for its own sake, and the market takes a bit of a downturn, advanced degrees are an excellent investment when employment is tougher to find. Becoming more versatile and understanding how technologies are built will make you more employable in the long run. An after-work (or while unemployed) expenditure for 2-4 years can make a significant boost to your lifetime income, as well as add job security.

But if you’re in a hurry, bootcamps are also a good place to learn whatever’s new and upcoming. One of the current ‘best practices’ for finding a new job is having a solid GitHub repository of code you have created. If you create this repository at a bootcamp, as well as an interesting capstone, that boot camp may be time well spent, even for an experienced developer. Codementor would be a great place to get guidance in how to build a GitHub codebase to impress.

As I travel down the ‘time investment’ ladder, conferences are a good place to find out what’s hot, as well as being able to take workshops with developers who have created the latest technology. Amazon runs monthly (at minimum) events on AWS, and all sorts of other events are scheduled regularly. Presenters and educators at any conference will be biased in favor of the technologies they have spent years developing, working with, or are paid to support. That said, because they are so invested in a particular technology, they are generally informative and interested in helping you expand your knowledge base with their chosen technologies.

Specific technology training/certification is always available. I know, somewhere in the back of my head, that my life would be much easier if I had spent six months getting Oracle DBA certified in my early- to mid-twenties. Small companies don’t respect certifications all that much, but large companies see certifications as mitigation of their risks. If you are certified with a particular technology, it is more likely (in their eyes) that you will be competent with that specific technology in an expected way. Thus, getting certified can be a large step towards a comparatively stable career in a tumultuous market. As a warning, it can also be a large investment in a technology that disappears from the job market.

Open source contribution is always available. While open source software is notoriously messy, working on several projects will help you understand what standards would help in an ideal world. While this may be a scary step for a newer developer, it’s worth trying. Having worked with a number of professionally-built code bases, my bet is if you wanted to walk in and write logical comments/documentation in the top 10 open source projects, you could start tomorrow and nobody would complain (as long as your contributions make sense!).

I found that teaching through Codementor taught me a tremendous amount. Every question a student had was completely new to me, and I spent quite a bit of time researching and fixing things I didn’t know could break. This type of experience was mirrored during the time I spent teaching a boot camp.

The absolute lowest investment way to learn some new methods for code management is to go to a hackathon. I have only made one ‘viable fun product’ (a game) during such a hackathon, but I learned a ton from a wide variety of other programmers.

5. Conclusion

As a developer, learning and using best practices is the cornerstone of maintaining a solid career. The only constant in the computer industry is change, and to fall behind the curve is costly. By continually watching the way the industry shifts, you can produce better code more quickly. By producing better code more quickly, you can make more effective solutions.

There are many resources detailing how others have improved their code quality, or built businesses on code, but all of the technologies you use during your career will certainly change or morph while you are building with them. Stay aware of your environment and your career can thrive.