On an expedition with the Schmidt Ocean Institute off the coast of San Diego in August 2021, MBARI sent the pair of tools, along with a specialized DNA sampling apparatus, hundreds of meters deep to explore the midwaters. The researchers used the cameras to scan at least two unnamed creatures: a new ctenophore and a new siphonophore.

JOOST DANIELS © 2021 MBARI

The successful scans strengthen the case for virtual holotypes—digital, rather than physical, specimens that can serve as the basis for a species definition when collection isn’t possible. Historically, a species’ holotype has been a physical specimen meticulously captured, preserved, and catalogued—an anglerfish floating in a jar of formaldehyde, a fern pressed in a Victorian book, or a beetle pinned to the wall of a natural history museum. Future researchers can learn from these and compare them with other specimens.

Proponents say virtual holotypes like 3D models are our best chance at documenting the diversity of marine life, some of which is on the precipice of being lost forever. Without a species description, scientists can’t monitor populations, identify potential hazards, or push for conservation measures.

“The ocean is changing rapidly: increasing temperatures, decreasing oxygen, acidification,” says Allen Collins, a jelly expert with dual appointments at the National Oceanic and Atmospheric Administration and the Smithsonian National Museum of Natural History. “There are still hundreds of thousands, perhaps even millions, of species to be named, and we can’t afford to wait.”

Jelly in four dimensions

Marine scientists who research gelatinous midwater creatures all have horror stories of watching potentially new species disappear before their eyes. Collins recalls trying to photograph ctenophores in the wet lab of a NOAA research ship off the coast of Florida: “Within a few minutes, because of either the temperature or the light or the pressure, they just started falling apart,” he says. “Their bits just started coming off. It was a horrible experience.”

Kakani Katija, a bioengineer at MBARI and the driving force behind DeepPIV and EyeRIS, didn’t set out to solve the midwater collector’s headache. “DeepPIV was developed to look at fluid physics,” she explains. In the early 2010s, Katija and her team were studying how sea sponges filter-feed and wanted a way to track the movement of water by recording the three-dimensional positions of minute particles suspended in it.
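Particle image velocimetry (PIV), the fluid-physics technique DeepPIV was originally developed for, works by comparing successive images of suspended particles and working out how far they moved. Here is a hypothetical two-dimensional toy, not MBARI's code. It assumes each frame is a small grayscale grid and estimates the particles' shared displacement by brute-force cross-correlation; the function names are illustrative. Production PIV software typically splits frames into many small interrogation windows, uses FFT-based correlation, and refines the correlation peak to sub-pixel precision.

```python
# Hypothetical toy PIV step: estimate how far a group of particles moved
# between two frames by brute-force cross-correlation over small shifts.

def estimate_displacement(frame_a, frame_b, max_shift):
    """Return the (dy, dx) shift that best maps frame_a onto frame_b."""
    rows, cols = len(frame_a), len(frame_a[0])
    best_shift, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Unnormalized cross-correlation: sum products of overlapping pixels.
            score = 0.0
            for y in range(rows):
                for x in range(cols):
                    y2, x2 = y + dy, x + dx
                    if 0 <= y2 < rows and 0 <= x2 < cols:
                        score += frame_a[y][x] * frame_b[y2][x2]
            if score > best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift


def make_frame(size, particles):
    """Build a size x size frame with unit-brightness particles at (y, x)."""
    frame = [[0.0] * size for _ in range(size)]
    for y, x in particles:
        frame[y][x] = 1.0
    return frame


a = make_frame(8, [(1, 1), (2, 5), (5, 3)])
b = make_frame(8, [(2, 3), (3, 7), (6, 5)])  # same particles, moved by (1, 2)
print(estimate_displacement(a, b, max_shift=3))  # (1, 2)
```

The shift where every particle in one frame lands on a particle in the other produces the highest score, which is why sparse, irregularly scattered particles make good flow tracers.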

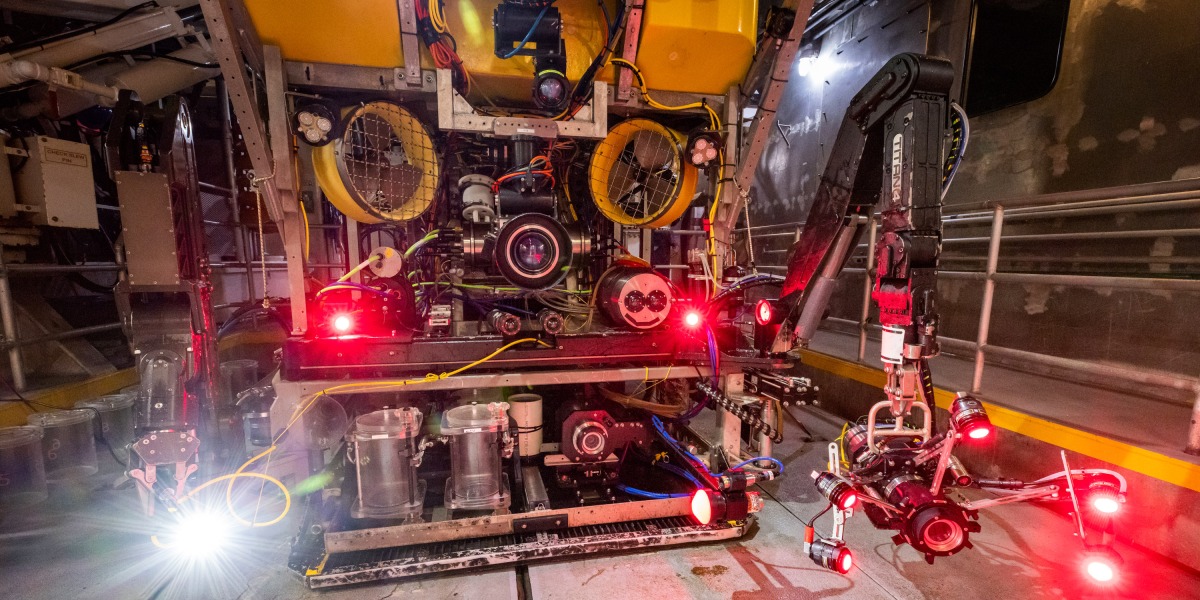

They later realized the system could also be used to noninvasively scan gelatinous animals. Using a powerful laser mounted on a remotely operated vehicle, DeepPIV illuminates one cross-section of the creature’s body at a time. “What we get is a video, and each video frame ends up as one of the images of our stack,” says Joost Daniels, an engineer in Katija’s lab who’s working to refine DeepPIV. “And once you’ve got a stack of images, it’s not much different from how people would analyze CT or MRI scans.”
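Because each video frame is one laser-lit cross-section, turning a DeepPIV pass into a 3D model starts with stacking the frames in order, much as with CT or MRI slices. A minimal sketch, assuming evenly spaced slices, an animal that holds still during the sweep, and grayscale frames stored as nested lists (all simplifications; the function names are hypothetical). It also computes a maximum-intensity projection, a common way to view CT and MRI volumes, plus a crude count of bright voxels.

```python
# Hypothetical sketch: treat ordered video frames as slices of a volume.

def stack_frames(frames):
    """Return volume[z][y][x], one z-slice per frame, after checking shapes."""
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    for frame in frames:
        if len(frame) != rows or any(len(row) != cols for row in frame):
            raise ValueError("all frames must have the same dimensions")
    return [[list(row) for row in frame] for frame in frames]


def max_intensity_projection(volume):
    """Collapse along z, keeping the brightest voxel in each (y, x) column."""
    rows, cols = len(volume[0]), len(volume[0][0])
    return [[max(s[y][x] for s in volume) for x in range(cols)]
            for y in range(rows)]


def count_bright_voxels(volume, threshold):
    """Count voxels brighter than threshold, a crude proxy for lit tissue."""
    return sum(1 for s in volume for row in s for v in row if v > threshold)


frames = [
    [[0, 5, 0], [0, 0, 0]],
    [[0, 9, 1], [0, 2, 0]],
    [[0, 3, 0], [0, 0, 7]],
]
volume = stack_frames(frames)
print(max_intensity_projection(volume))  # [[0, 9, 1], [0, 2, 7]]
print(count_bright_voxels(volume, threshold=2))  # 4
```

A real workflow would also need to register slices against each other if the animal drifts, and would segment tissue with proper imaging software rather than a single threshold.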

Ultimately, DeepPIV produces a still 3D model, but marine biologists were eager to observe midwater creatures in motion. So Katija, MBARI engineer Paul Roberts, and other members of the team created a light-field camera system dubbed EyeRIS that detects not just the intensity but also the direction of the light arriving from a scene. A microlens array between the camera lens and image sensor splits the light field into multiple slightly offset views, much like the compound eye of a housefly.

PAUL ROBERTS © 2021 MBARI

EyeRIS’s raw, unprocessed images look like what happens when you take your 3D glasses off during a movie: multiple offset versions of the same object. But once sorted by depth, the footage resolves into delicately rendered three-dimensional videos, allowing researchers to observe behaviors and fine-scale locomotor movements (jellies are experts at jet propulsion).
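One textbook way to pull depth out of light-field data is shift-and-add refocusing. Each microlens view sees the scene from a slightly different position, so a point at a given depth appears shifted by an amount (its disparity) proportional to that view's offset. Undoing the shift for a chosen disparity lines up every copy of objects at that depth while smearing everything else. The code is a one-dimensional toy under stated assumptions (three views, integer disparities, a single bright point) with hypothetical function names. It is not EyeRIS's actual pipeline, which the article does not describe; real cameras use a 2D grid of views and interpolate fractional shifts.

```python
# Hypothetical 1D toy of shift-and-add light-field refocusing.

def refocus(views, offsets, disparity):
    """Average the views after undoing the shift expected at `disparity`.

    A point at position p with this disparity appears at p + disparity * u
    in the view whose offset is u, so sampling each view at x + disparity * u
    lines the copies up at x = p.
    """
    width = len(views[0])
    out = [0.0] * width
    for view, u in zip(views, offsets):
        shift = disparity * u
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                out[x] += view[src]
    return [v / len(views) for v in out]


def best_disparity(views, offsets, candidates):
    """Pick the candidate whose refocused signal has the highest peak."""
    return max(candidates, key=lambda d: max(refocus(views, offsets, d)))


def point_view(width, position):
    """A view containing one unit-brightness point."""
    view = [0.0] * width
    view[position] = 1.0
    return view


offsets = [-1, 0, 1]
# One point at x = 5 with disparity 2 shows up at 3, 5, and 7 in the three views.
views = [point_view(11, 3), point_view(11, 5), point_view(11, 7)]
print(best_disparity(views, offsets, candidates=range(4)))  # 2
print(refocus(views, offsets, 2)[5])  # 1.0
```

At the wrong disparity the three copies land in different places and each contributes only a third of the brightness; at the right one they stack into a single sharp peak, which is the sense in which refocusing sorts the scene by depth.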

What’s a picture worth?

Over the decades, researchers have occasionally attempted to describe new species without a traditional holotype in hand—a South African bee fly using only high-definition photos, a cryptic owl with photos and call recordings. Doing so can incur the wrath of some scientists: in 2016, for example, hundreds of researchers signed a letter defending the sanctity of the traditional holotype.

But in 2017, the International Commission on Zoological Nomenclature—the governing body that publishes the code dictating how species should be described—issued a clarification of its rules, stating that new species can be characterized without a physical holotype in cases where collection isn’t feasible.

In 2020, a team of scientists including Collins described a new genus and species of comb jelly based on high-definition video. (Duobrachium sparksae, as it was christened, looks something like a translucent Thanksgiving turkey with streamers trailing from its drumsticks.) Notably, there was no grumbling from the taxonomist peanut gallery—a win for advocates of digital holotypes.

Collins says the MBARI team’s visualization techniques only strengthen the case for digital holotypes, because they more closely approximate the detailed anatomical studies scientists conduct on physical specimens.

A parallel movement to digitize existing physical holotypes is also gaining steam. Karen Osborn is a midwater invertebrate researcher and curator of annelids and peracarids (animals much more substantial and easier to collect than midwater jellies) at the Smithsonian National Museum of Natural History. Osborn says the pandemic has underlined the utility of high-fidelity digital holotypes. Countless field expeditions have been scuttled by travel restrictions, and annelid and peracarid researchers “haven’t been able to go in [to the lab] and look at any specimens,” she explains, so they can’t describe anything from physical types right now. But study of the digital collection is booming.

Using a micro-CT scanner, Smithsonian scientists have given researchers around the world access to holotype specimens in the form of “3D reconstructions in minute detail.” When she gets a specimen request, which typically involves mailing the priceless holotype at the risk of damage or loss, Osborn says she first offers to send a virtual version. Although most researchers are initially skeptical, “without fail, they always get back to us: ‘Yeah, I don’t need the specimen. I’ve got all the information I need.’”

“EyeRIS and DeepPIV give us a way of documenting things in situ, which is even cooler,” Osborn adds. During research expeditions, she’s seen the system in action on giant larvaceans, small invertebrates whose intricate “snot palaces” of secreted mucus scientists had never been able to study completely intact—until DeepPIV.

Katija says the MBARI team is pondering ways to gamify species description along the lines of Foldit, a popular citizen science project in which “players” use a video-game-like platform to determine the structure of proteins.

In the same spirit, citizen scientists could help analyze the images and scans taken by ROVs. “Pokémon Go had people wandering their neighborhoods looking for fake things,” Katija says. “Can we harness that energy and have people looking for things that aren’t known to science?”

Elizabeth Anne Brown is a science journalist based in Copenhagen, Denmark.