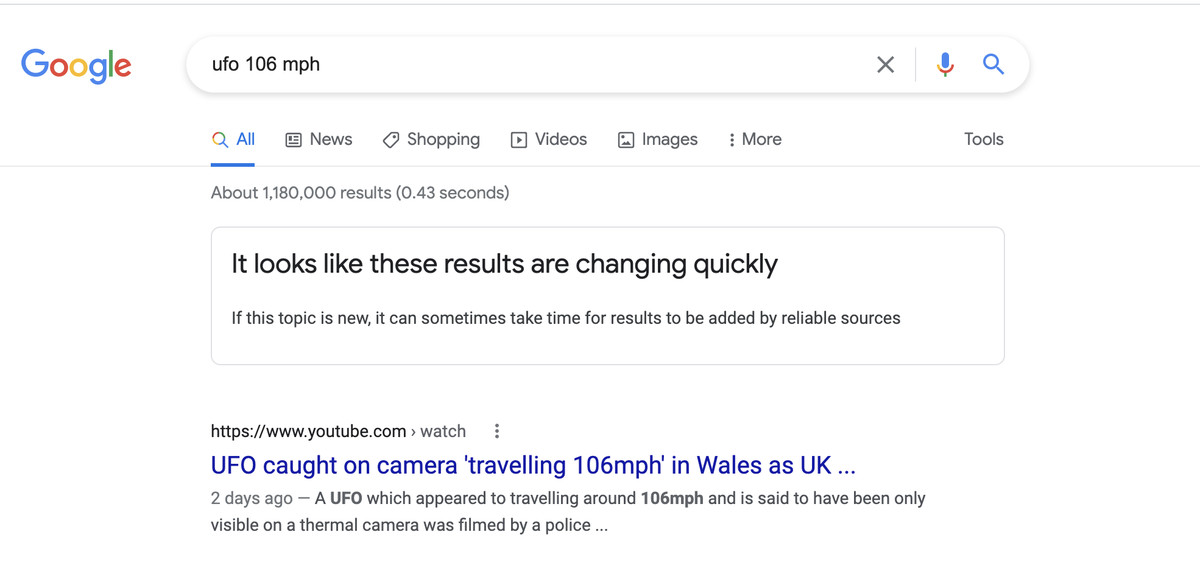

Google is testing a new feature to notify people when they search for a topic that may have unreliable results. The move is a notable step by the world’s most popular search engine to give people more context about breaking information that’s popular online and still actively evolving, like suspected UFO sightings or developing news stories.

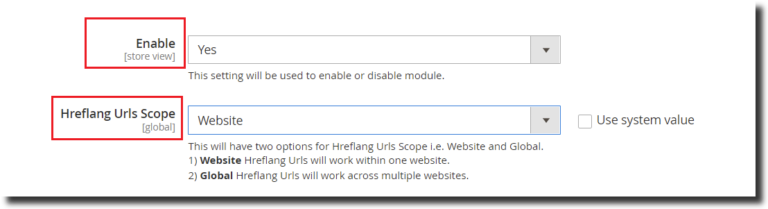

The new prompt warns users that the results they are seeing are changing quickly, and reads, in part, “If this topic is new, it can sometimes take time for results to be added by reliable sources.” Google confirmed to Recode that it started testing the feature about a week ago. Currently, the company says the notice is only showing up in a small percentage of searches, which tend to be about developing trending topics.

Companies like Google, Twitter, and Facebook have often struggled to handle the high volume of misinformation, conspiracy theories, and unverified news stories that run rampant on the internet. In the past, they have largely stayed away from taking content down in all but the most extreme cases, citing a commitment to free speech values. During the Covid-19 pandemic and the 2020 US elections, some companies took the unprecedented action of taking down popular accounts perpetuating misinformation. But the kind of label Google is rolling out — which simply warns users without blocking content — reflects a more long-term incremental approach to educating users about questionable or incomplete information.

“When anybody does a search on Google, we’re trying to show you the most relevant, reliable information we can,” said Danny Sullivan, a public liaison for Google Search. “But we get a lot of things that are entirely new.”

Sullivan said the notice isn’t saying that what you’re seeing in search results is right or wrong — but that it’s a changing situation, and more information may come out later.

As an example, Sullivan cited a report about a suspected UFO sighting in the UK.

“Someone had gotten this police report video released out in Wales, and it’s had a little bit of press coverage. But there’s still not a lot about it,” said Sullivan. “But people are probably searching for it, they may be going around on social media, so we can tell it’s starting to trend. And we can also tell that there’s not a lot of necessarily great stuff that’s out there. And we also think that maybe new stuff will come along.”

Other examples of trending search queries that could currently prompt the notice are “why is britney on lithium” and “black triangle ufo ocean.”

The feature builds on Google’s recent efforts to help users with “search literacy,” or to better understand context about what they’re looking up. In April 2020, the company released a feature telling people when there aren’t enough good matches for their search, and in February 2021, it added an “about” button next to most search results showing people a brief Wikipedia description of the site they’re seeing, when available.

Google told Recode it ran user research on the notice that showed people found it helpful.

The new prompt is also part of a larger trend by major tech companies to give people more context about new information that could turn out to be wrong. Twitter, for example, released a slew of features ahead of the 2020 US elections cautioning users if information they were seeing was not yet verified.

Some social media researchers, including Renee DiResta at the Stanford Internet Observatory, who tweeted about the feature, welcome the kind of added context Google rolled out today. It’s a welcome alternative, they say, to the debates around whether or not to ban a certain account or post.

“It’s a great way of making people pause before they act on or spread information further,” said Evelyn Douek, a researcher at Harvard who studies online speech. “It doesn’t involve anyone making judgments about the truth or falsity of any story but just gives the readers more context. … In almost all breaking news contexts, the first stories are not the complete ones, and so it’s good to remind people of that.”

There are still some questions about how this all will work, though. For example, it’s not clear exactly what sources Google finds to be reliable on a given search result, and how many reliable sources need to weigh in before a questionable trending news topic loses the label. As the feature rolls out more broadly, we can likely expect to see more discussion about how it’s implemented.