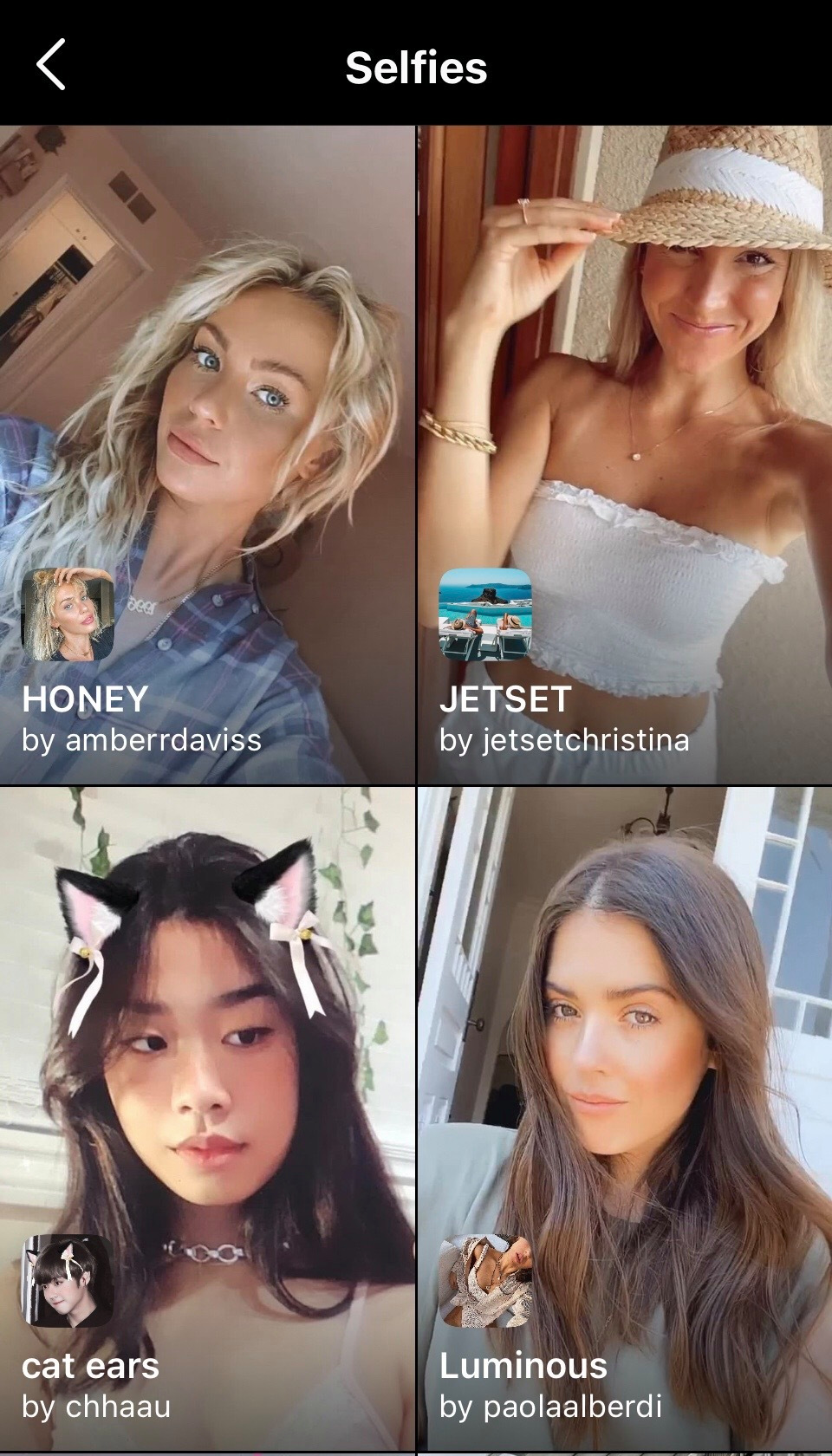

There are thousands of distortion filters available on major social platforms, with names like La Belle, Natural Beauty, and Boss Babe. Even the goofy Big Mouth on Snapchat, one of social media’s most popular filters, is made with distortion effects.

In October 2019, Facebook banned distortion effects because of “public debate about potential negative impact.” Awareness of body dysmorphia was rising, and a filter called FixMe, which allowed users to mark up their faces as a cosmetic surgeon might, had sparked a surge of criticism for encouraging plastic surgery. But in August 2020, the effects were re-released with a new policy banning filters that explicitly promoted surgery. Effects that resize facial features, however, are still allowed. (When asked about the decision, a spokesperson directed me to Facebook’s press release from that time.)

When the effects were re-released, Rocha decided to take a stand and began posting condemnations of body shaming online. She committed to no longer using deformation effects herself unless they are clearly humorous or dramatic rather than beautifying. She says she didn’t want to “be responsible” for the harmful effects some filters were having on women: some, she says, have looked into getting plastic surgery so that they look like their filtered selves.

“I wish I was wearing a filter right now”

Krista Crotty is a clinical education specialist at the Emily Program, a leading eating disorder and mental health center based in St. Paul, Minnesota. Much of her job over the past five years has focused on educating patients about how to consume media in a healthier way. She says that when patients present themselves differently online and in person, she sees an increase in anxiety. “People are putting up information about themselves—whether it’s size, shape, weight, whatever—that isn’t anything like what they actually look like,” she says. “In between that authentic self and digital self lives a lot of anxiety, because it’s not who you really are. You don’t look like the photos that have been filtered.”

“There’s just somewhat of a validation when you’re meeting that standard, even if it’s only for a picture.”

For young people, who are still working out who they are, navigating between a digital and authentic self can be particularly complicated, and it’s not clear what the long-term consequences will be.

“Identity online is kind of like an artifact, almost,” says Claire Pescott, the researcher from the University of South Wales. “It’s a kind of projected image of yourself.”

Pescott’s observations of children have led her to conclude that filters can have a positive impact on them. “They can kind of try out different personas,” she explains. “They have these ‘of the moment’ identities that they could change, and they can evolve with different groups.”

But she doubts that all young people are able to understand how filters affect their sense of self. And she’s concerned about the way social media platforms grant immediate validation and feedback in the form of likes and comments. Young girls, she says, have particular difficulty differentiating between filtered photos and ordinary ones.

Pescott’s research also revealed that while children are now often taught about online behavior, they receive “very little education” about filters. Their safety training “was linked to overt physical dangers of social media, not the emotional, more nuanced side of social media,” she says, “which I think is more dangerous.”

Bailenson expects that we can learn about some of these emotional unknowns from established VR research. In virtual environments, people’s behavior changes with the physical characteristics of their avatar, a phenomenon called the Proteus effect. Bailenson found, for example, that people who had taller avatars were more likely to behave confidently than those with shorter avatars. “We know that visual representations of the self, when used in a meaningful way during social interactions, do change our attitudes and behaviors,” he says.

But sometimes those behavioral changes can play into stereotypes. A well-known study from 1988 found that athletes who wore black uniforms were more aggressive and violent while playing sports than those wearing white uniforms. And this translates to the digital world: one recent study showed that video game players who used avatars of the opposite sex actually behaved in a way that was gender stereotypical.

Bailenson says we should expect to see similar behavior on social media as people adopt masks based on filtered versions of their own faces, rather than entirely different characters. “The world of filtered video, in my opinion—and we haven’t tested this yet—is going to behave very similarly to the world of filtered avatars,” he says.

Selfie regulation

Considering the power and pervasiveness of filters, there is very little hard research about their impact—and even fewer guardrails around their use.

I asked Bailenson, who is the father of two young girls, how he thinks about his daughters’ use of AR filters. “It’s a real tough one,” he says, “because it goes against everything that we’re taught in all of our basic cartoons, which is ‘Be yourself.’”

Bailenson also distinguishes playful use from real-time, constant augmentation of ourselves, and he says it is important to understand what these different contexts mean for kids.


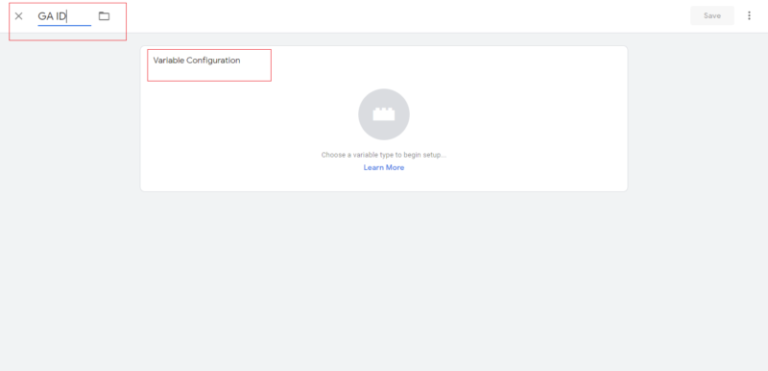

What few regulations and restrictions there are on filter use rely on companies to police themselves. Facebook’s filters, for example, have to go through an approval process that, according to the spokesperson, uses “a combination of human and automated systems to review effects as they are submitted for publishing.” They are reviewed for certain issues, such as hate speech or nudity, and users are also able to report filters, which then get manually reviewed.

The company says it consults regularly with expert groups, such as the National Eating Disorders Association and the JED Foundation, a mental-health nonprofit.

“We know people may feel pressure to look a certain way on social media, and we’re taking steps to address this across Instagram and Facebook,” said a statement from Instagram. “We know effects can play a role, so we ban ones that clearly promote eating disorders or that encourage potentially dangerous cosmetic surgery procedures… And we’re working on more products to help reduce the pressure people may feel on our platforms, like the option to hide like counts.”

Facebook and Snapchat also label filtered photos to show that they’ve been transformed—but it’s easy to get around the labels by simply applying the edits outside of the apps, or by downloading and reuploading a filtered photo.

Labeling might be important, but Pescott says she doesn’t think it will dramatically improve an unhealthy beauty culture online.

“I don’t know whether it would make a huge amount of difference, because I think it’s the fact we’re seeing it, even though we know it’s not real. We still have that aspiration to look that way,” she says. Instead, she believes that the images children are exposed to should be more diverse, more authentic, and less filtered.

There’s another concern, too, especially since the majority of users are very young: the amount of biometric data that TikTok, Snapchat, and Facebook have collected through these filters. Though both Facebook and Snapchat say they do not use filter technology to collect personally identifiable data, a review of their privacy policies shows that they do indeed have the right to store data from the photographs and videos on the platforms. Snapchat’s policy says that snaps and chats are deleted from its servers once the message is opened or expires, but stories are stored longer. Instagram stores photo and video data as long as it wants or until the account is deleted; Instagram also collects data on what users see through its camera.

Meanwhile, these companies continue to concentrate on AR. In a February 2021 speech to investors, Snapchat co-founder Evan Spiegel said, “Our camera is already capable of extraordinary things. But it is augmented reality that’s driving our future.” He said the company is “doubling down” on augmented reality in 2021, calling the technology “a utility.”

Both Facebook and Snapchat say that the facial detection systems behind filters don’t connect back to the identity of users. A spokesperson from Snapchat said, “Snap’s Lens product does not collect any identifiable information about a user and we can’t use it to tie back to, or identify, individuals.” Still, it’s worth remembering that Facebook’s smart photo tagging feature, which looks at your pictures and tries to identify people who might be in them, was one of the earliest large-scale commercial uses of facial recognition. And TikTok recently agreed to pay $92 million to settle a lawsuit that alleged the company was misusing facial recognition for ad targeting.

And Facebook in particular sees facial recognition as part of its AR strategy. In a January 2021 blog post titled “No Looking Back,” Andrew Bosworth, the head of Facebook Reality Labs, wrote: “It’s early days, but we’re intent on giving creators more to do in AR and with greater capabilities.” The company’s planned release of AR glasses is highly anticipated, and it has already teased the possible use of facial recognition as part of the product.

In light of all the effort it takes to navigate this complex world, Sophia and Veronica say they just wish they were better educated about beauty filters. Besides their parents, no one ever helped them make sense of it all. “You shouldn’t have to get a specific college degree to figure out that something could be unhealthy for you,” Veronica says.