Facebook wants to reintroduce users to its algorithms.

On Wednesday, within the span of a few hours, the company took several steps to encourage users to trust its ranking and recommendation systems. In a blog post, Facebook said it would make it easier for users to control what’s in their feeds, pointing to both new and existing tools. In an apparent attempt to buttress that announcement, Facebook’s vice president of global affairs Nick Clegg published a 5,000-word Medium post defending the company’s ranking algorithms and repudiating the argument that those algorithms create dangerous echo chambers. Clegg also defended Facebook’s algorithms in a wide-ranging interview with the Verge published on the same day.

Taken together, these moves appear to be a concerted campaign by Facebook to repair the negative reputation of its algorithms, which many say actively encourage and incentivize political polarization, misinformation, and extreme content. The efforts come as the company faces heavy criticism from lawmakers for its platform design, and just a week after CEO Mark Zuckerberg testified to Congress during a hearing on misinformation.

Two of the tools Facebook highlighted already existed (Recode wrote about both last year). “Favorites” allows users to select up to 30 sources to prioritize in their feed, and “Why am I seeing this?” offers an explanation for why a certain piece of content appeared in their feed. In its Wednesday announcement, Facebook said it would also institute a “Feed Filter” tool, which will enable users to switch between an algorithmically curated feed, a feed based on the pages they’ve “favorited,” and a reverse-chronological feed. Notably, there’s no way to switch to the reverse-chronological version permanently. Facebook also said that it will allow users some control over who can comment on individual posts, following in the footsteps of Twitter.

In his Wednesday Medium post, Clegg argues that the newly announced News Feed features provide users with algorithmic choice and transparency, and that Facebook takes steps to demote clickbait and false or harmful content. He claims that it’s not in Facebook’s commercial interest to promote extreme content, saying that advertisers — the key to Facebook’s business model — don’t like this content. Clegg also told the Verge that if Facebook wants to keep its users for the long term, there’s no reason for the company “to give people the kind of sugar rush of artificially polarizing content.”

But Facebook’s longtime critics don’t seem to be buying these arguments.

“Nick Clegg’s Medium post is a cynical, breathtaking display of gaslighting on a scale hard to fathom even for Facebook,” a spokesperson for the Real Facebook Oversight Board, a group of scholars and activists critical of Facebook, told Recode. “Clegg asks, ‘Where does FB’s incentive lie?’ A better question might be: Where does Nick Clegg’s incentive lie? The answer to that is clear.”

“Facebook managed to both insult its users for being too dimwitted to understand how its algorithms work while also blaming them for taking advantage of them too effectively,” said Ashley Boyd, the vice president for advocacy at Mozilla, in a Wednesday statement. “The News Feed controls unveiled today amount to nothing more than an admission that its algorithms are the problem.”

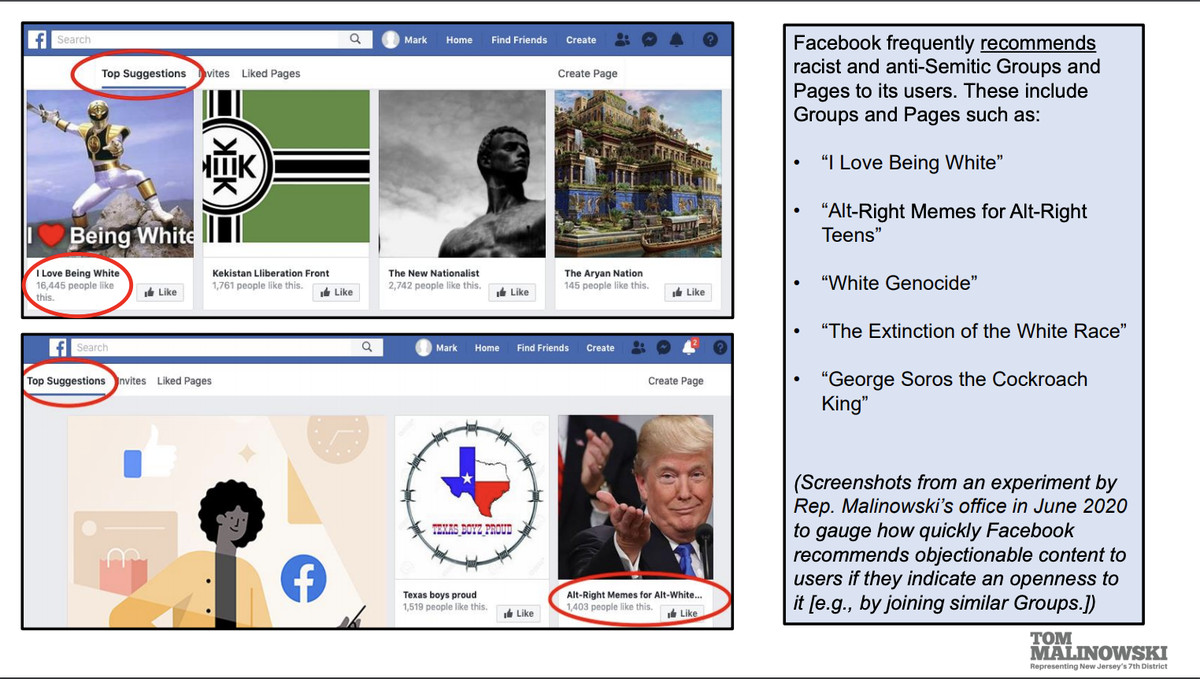

Over the years, journalists and researchers have documented repeated incidents of misinformation, extremist content, and hateful speech being promoted on Facebook. For example, this year, a man who became an FBI informant for a plot to kidnap the governor of Michigan reportedly said he came across the group behind the plot because Facebook suggested it. The list of the most-engaged posts on Facebook every day is frequently topped by far-right sources, and researchers from New York University found that accounts sharing misinformation tend to get more engagement than other sources. Meanwhile, a research effort from Rep. Tom Malinowski’s office found that Facebook’s systems recommended groups with names like “George Soros the Cockroach King” and “I Love Being White.”

Kelley Cotter, a postdoctoral scholar at Arizona State University, told Recode that the purpose of Clegg’s post seemed to be to “‘manufacture consent’ to surveillance capitalism,” and she emphasized that engagement algorithms are, in fact, key to Facebook’s business model. “[Facebook is], at the end of the day, a public corporation that generates the vast majority of their income by way of algorithms and data collection,” she said in an email.

Facebook’s latest actions come as enthusiasm for more oversight over social media algorithms has grown. In recent weeks, at least one member of Facebook’s oversight board, a group of experts and journalists the company has appointed to rule on tough content moderation decisions, has expressed interest in looking at the company’s ranking and recommendation systems. Last week, Reps. Anna Eshoo and Tom Malinowski reintroduced the Protecting Americans from Dangerous Algorithms Act, which would amend Section 230 and take away platforms’ liability protections in certain cases involving civil rights or terrorism.

Meanwhile, Facebook appears to be taking cues from Jack Dorsey, who has repeatedly referred to the idea of algorithmic choice as a way forward. Last week, the Twitter CEO said that Bluesky, a relatively new research initiative being funded by Twitter, is looking at building “open” recommendation systems that would give social media users more control (Twitter already allows users to toggle between reverse-chronological and engagement-based feeds). Now, Facebook is going in a similar direction, with Clegg telling the Verge that giving users more control over their feeds is “very much the direction we’re going.”

But overall, some say that Facebook’s Wednesday PR campaign seems out of touch with growing concerns about its algorithms and the type of content they amplify and prioritize.

“I think the main thing we have to wonder is why did Nick Clegg (Facebook, really) feel compelled to write this 5,000-word treatise on why algorithms are not a problem?” Cotter, of Arizona State, told Recode. “‘The lady doth protest too much, methinks.’”

Update Wednesday, March 31, 8:20 PM ET: This piece has been updated to include a statement from Mozilla’s vice president of advocacy.

Open Sourced is made possible by Omidyar Network. All Open Sourced content is editorially independent and produced by our journalists.